ChatGPT Web Search: Discovery or Grounding?

Maciej Czypek·Founder, aeoh

December 30, 2025

When we observe how ChatGPT interacts with the web, we often project our own habits onto it. We assume it functions like a classic search engine user: entering a keyword, scrolling through a list of blue links, and clicking to learn something new.

However, recent analysis of ChatGPT's search logs and web actions suggests a fundamental shift. In many high-volume queries, ChatGPT is not browsing to discover information. It is browsing just to ground information it, or its retrieval pipeline, already possesses.

This distinction between Discovery and Grounding is crucial for understanding how to position your brand or product to be recommended by AI in its answers. By analyzing specific search behaviors, we can decode the architecture behind the ChatGPT "Web Actor" and its "Retrieval Pipeline".

The Evidence: Grounding Over Discovery

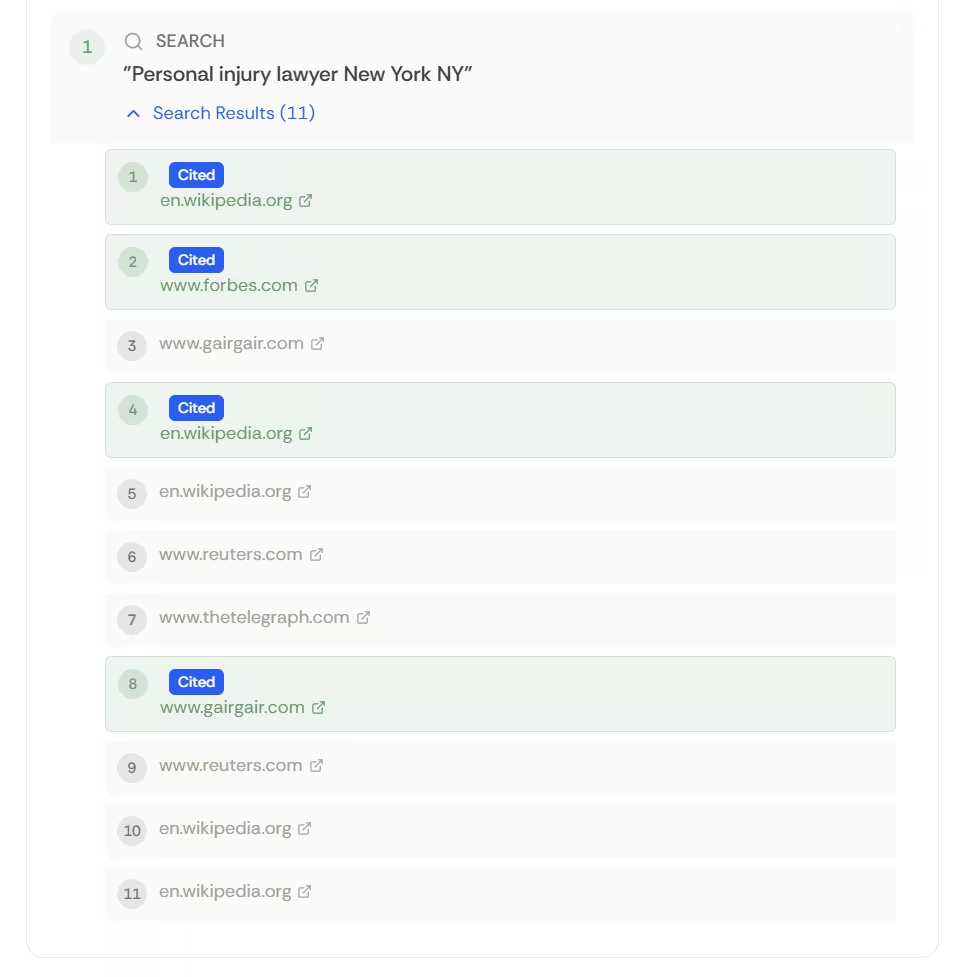

Let us look at a specific test case: a query for "Personal injury lawyer New York NY".

In a classic search environment (Google/Bing), this broad query would return a list of some results. However, that's not the case with ChatGPT's web search results - these contain very specific pages looking like ready-to-use options. Gair, Gair, Conason, Rubinowitz, Bloom, Hershenhorn, Steigman & Mackauf, and concrete profiles on Wikipedia. Crucially, ChatGPT did not perform deep web actions such as opening and reading links to vet these lawyers in real-time. Moreover, the response generation, including the search, occurs in 1-3 seconds.

This speed and precision imply that the ChatGPT Web Actor (the client-side agent) is not "reading" these websites live. Instead, it is receiving a pre-digested response from the OpenAI Retrieval Pipeline.

In this case, the search query acts as a mechanism for Grounding - fetching a URL to validate and annotate a response - rather than Discovery, where the model seeks to learn who the best lawyer is, like a normal web user would. The pipeline treats the query as a "task" to be completed, returning ready-to-use citations for entities that have high authority in the index.

The Proactive Pipeline

The "Grounding" hypothesis is further supported by ChatGPT's following, second search query: "Samer Habbas law Los Angeles personal injury". Here, AI (Web Actor) decided to find a specific lawyer. However, the results reveal something fascinating about OpenAI's Web Search backend pipeline:

- The search results return Samer Habbas's official site (as expected).

- The pipeline also returns Brian Breiter (another lawyer) with a ready-to-cite official link.

This indicates that the Retrieval Pipeline is not just a passive search index. It understands the broader semantic goal of the ChatGPT Web Actor and proactively steps out of line. Although the Web Actor requested a specific candidate, the backend provided that candidate, along with a high-authority alternative/addition.

The Two Modes: Fast Grounding vs. Deep Discovery

This behavior suggests ChatGPT operates in two distinct modes depending on the complexity of the query and the market depth.

1. Fast Mode: Grounding

For high-volume, well-documented queries (like lawyers in NY or LA), the model relies on Grounding. The Web Actor simply "pins" these citations to the generated text. It does not need to verify the content because the trust score of the source is high.

In this mode, ChatGPT relies heavily on pre-indexed, high-trust sources such as Forbes, Reuters, or, very often, Wikipedia. It trusts these domains implicitly. Therefore, when possible, it's worth tracking who OpenAI partners with regarding data accessibility. Such parties most likely become major annotations (citations) sources for web search grounding.

2. Agentic Mode: Discovery

For smaller, very specific, or novel markets, the behavior shifts to Discovery. Since the index lacks a "ready-to-cite" answer, the Web Actor must perform actual browsing. We see logs of actions such as open_page, reading content, and find_pattern.

In the absence of major, high-priority sources, the model, depending on the market, AI uses aggregators (like Yelp, Google Maps, Uber Eats, etc.), articles, social media posts, and other secondary websites. Moreover, the Web Actor must actively verify the information because the confidence in the source is lower. In such markets, it's crucial to have your primary, official business website, preferably with proper AEO optimizations.

Market Implications

Understanding whether ChatGPT is in "Grounding" or "Discovery" mode in your market is vital for being ultimately included in AI recommendations.

For Large, Competitive Markets

In these markets, the "Fast/Grounding" mode dominates. The pipeline prioritizes "Trusted Sources." What matters is being present in major, high-priority sources.

You need to be mentioned in the sources the pipeline trusts: major news outlets, Wikipedia, or top-tier industry publications. If you are not in the Knowledge/Authority Graph of these high-trust nodes, the pipeline will likely skip you for a "safer" recommendation like Gair Gair in our example.

For Small or Niche Markets

In smaller locations or very specific sub-niches, the "Discovery" mode is more likely to trigger.

Since major press coverage is unlikely, ChatGPT looks for consensus. Being present and highly rated on aggregators (Avvo, Yelp, Google Maps, depending on your market) is critical because the Web Actor uses these to "verify" your existence and reputation during its live browsing session. As already stated, ChatGPT will also try to confirm up-to-date information on your official, primary website.

Measure AI Recommendations in Your Market

Aeoh allows enterprise and data-driven teams to measure AI recommendations with our custom 30-day AI Answers Index solution. Using hundreds of ChatGPT search snapshots aggregated into 20+ metrics, we help businesses understand in which mode AI operates in their market, which sources have the strongest influence, and much more to make this new, non-deterministic distribution layer measurable.

Check your business visibility in AI

Generate an AI Visibility Report and discover if ChatGPT, Google Gemini and other LLMs recommend you.

Generate Report